Reimagining Health Data Governance in the Age of Responsible AI

Opinion Pieces ▪ Dec 24, 2025

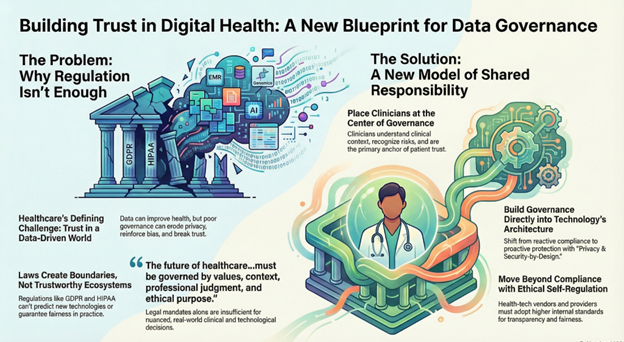

The Trust Gap at the Center of Digital Healthcare

Anyone working in modern healthcare can feel the pace of change. Clinical systems, national data exchanges, AI algorithms, genomics platforms, and remote monitoring tools now produce a constant stream of information—rich, granular, and continuous.

Handled with care, this data can detect illness earlier, tailor treatment plans, and strengthen entire health systems. Mishandled, it becomes a liability—fueling inequities, eroding confidence, and weakening the very relationships healthcare relies on.

The future we are building demands a new model of governance—one shaped as much by values and clinical judgment as by technology. A model that is:

- Led by clinicians

- Anchored in ethics

- Built with privacy and security at the foundation

- Supported by a digitally literate ecosystem

- Reinforced by responsible self-regulation

- Enabled—but not overshadowed—by regulation

Laws can guide us. But trust is earned through the everyday decisions made by clinicians, designers, vendors, and health systems.

Why Governance Has Become a Clinical Imperative

Health data now stretches far beyond the medical record. It includes sensor readings, behavioural indicators, genomic patterns, social determinants, and a growing universe of algorithmic predictions.

When used with intention, these insights help clinicians intervene early and personalize care. But when misused—or simply misunderstood—they can lead to biased outputs, inappropriate automation, and loss of transparency.

Regulatory frameworks such as GDPR, HIPAA, and India’s Digital Personal Data Protection (DPDP) Act offer essential protections. What they cannot do is settle the grey areas that arise in real clinical environments:

- How should an algorithm’s advice be weighed?

- What does fairness mean in practice?

- How do we protect patients across interconnected systems?

These questions demand contextual judgment, not just compliance.

Moving Beyond a Compliance Mindset

Regulations create boundaries, but they cannot guarantee trust. They cannot:

- Predict every technological leap

- Preempt bias in fast-moving AI systems

- Oversee every design decision

- Define responsible behavior in a clinical moment

- Replace the trust built between patients and clinicians

To build systems people believe in, governance must rest on three additional pillars:

- Privacy- and security-by-design

- Digital literacy across the entire health workforce

- Ethical self-regulation by both providers and vendors

These are not afterthoughts; they are structural requirements.

Governance Starts at the Design Table

Strong governance is not a policy binder—it is a design mindset.

Privacy-by-Design Means:

- Collecting only what is truly needed

- Asking consent in a way that’s understandable, not performative

- Ensuring patients can trace how their data moves

- Restricting secondary use instead of encouraging it

- Defaulting to minimisation and purpose limitation
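To make minimisation and purpose limitation concrete, here is a minimal sketch in Python. The purposes, field names, and the `minimise` helper are all hypothetical, chosen only for illustration; a real system would derive them from its own consent and data-sharing policies.

```python
# Hypothetical mapping from a declared purpose to the fields it may see.
ALLOWED_FIELDS = {
    "treatment": {"patient_id", "diagnosis", "medications", "allergies"},
    "billing": {"patient_id", "procedure_codes", "insurer"},
    "research": {"age_band", "diagnosis"},
}


def minimise(record, purpose):
    """Return only the fields permitted for the declared purpose."""
    if purpose not in ALLOWED_FIELDS:
        # No declared purpose means no access: deny by default.
        raise PermissionError(f"undeclared purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "patient_id": "patient-001",
    "diagnosis": "type 2 diabetes",
    "medications": ["metformin"],
    "insurer": "ExampleCare",
    "age_band": "40-49",
}

research_view = minimise(record, "research")
```

The design choice worth noting is the default: an unknown purpose is refused rather than given the full record, so secondary use has to be declared before it can happen.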

Security-by-Design Requires:

- Zero-trust system architecture

- Strong identity and access controls

- Encryption everywhere, not only in pockets

- Continuous monitoring and early warning systems

- Automated audit trails that cannot be edited retroactively

This shifts organizations from reacting after incidents to preventing many of them in the first place, and to detecting the rest early.
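One common way to approach audit trails that resist retroactive editing is a hash chain, where each entry commits to the one before it. The sketch below is illustrative only: the function names, the event fields, and the actors are assumptions, and a hash chain makes tampering detectable rather than impossible, so it would normally be paired with write-once storage or external anchoring.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, event):
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event, chaining it to the last entry in the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append(
        {"event": event, "prev": prev_hash, "hash": _entry_hash(prev_hash, event)}
    )


def verify_log(log):
    """Return True only if every entry still matches its recorded hash."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"actor": "dr_rao", "action": "view", "record": "patient-001"})
append_entry(audit_log, {"actor": "nurse_lee", "action": "update", "record": "patient-001"})
```

If anyone later rewrites an earlier event, `verify_log` fails for that entry and everything after it, which is what gives auditors an early warning.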

Digital Literacy: The Human Foundation of Trust

No matter how sophisticated a system is, people remain the ultimate safeguard. A digitally literate workforce is the backbone of any governance model.

It means ensuring:

- Clinicians can interpret AI outputs with clarity and caution

- Health workers can identify threats or anomalies quickly

- Patients understand the implications of data use and consent

- Communities feel confident navigating digital health tools

- Everyone recognizes the long-term impact of digital footprints

The better informed everyone is, the more trust becomes a shared, durable asset rather than a fragile one.

Ethical Self-Regulation: The Missing Piece in Digital Health

One of the most persistent misunderstandings in health technology is that meeting regulatory requirements equals ethical operation. Anyone who has worked in the field knows that is rarely true.

Responsible Technology Development Involves:

- Being explicit about model assumptions

- Testing for fairness, not assuming it

- Documenting data practices clearly

- Keeping humans in the loop for high-impact decisions
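"Testing for fairness" can start with something as simple as comparing how often a model flags patients in different groups. The sketch below computes one such measure, the demographic parity gap, on made-up data; the group labels and predictions are hypothetical, and this is only one of several fairness metrics, none of which settles the question on its own.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive predictions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up example: 1 means the model flagged the patient for follow-up.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(preds, groups)
gap = demographic_parity_gap(preds, groups)
```

A large gap does not prove the model is unfair, since underlying need may differ between groups, but it is the kind of signal that should trigger clinical and ethical review rather than being assumed away.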

Responsible Vendor Behavior Requires:

- Avoiding unnecessary data collection

- Rejecting opaque, unexplainable algorithms

- Prohibiting hidden commercial secondary use

- Preventing lock-in practices that restrict transparency

Responsible Healthcare Providers Must:

- Establish ethics boards for data-driven decisions

- Conduct routine fairness and safety audits

- Communicate openly with patients when issues arise

- Take accountability when harm occurs

Self-governance is not an alternative to regulation—it is what makes regulation meaningful.

Why Clinicians Must Lead

Clinicians understand the nuances of patient care in ways technology alone never can. They see where data supports decisions and where it risks distorting them.

They can recognize early signs of algorithmic drift or error. They understand the ethical weight behind each data point. And they hold patient trust—the most powerful currency in healthcare.

A strong governance model brings together clinicians, informaticians, ethicists, data scientists, cybersecurity experts, legal advisors, public health leaders, and patient representatives.

That diversity is not bureaucracy—it is protection.

The Capacity Problem: When AI Moves Faster Than Oversight

A new challenge has emerged globally: AI is advancing much faster than the technical capacity to oversee it. This is true across sectors—including government.

Effective AI governance requires deep expertise in transparency methodologies, secure computation, adversarial risk, federated architectures, and AI safety. These skills are in short supply worldwide.

This gap has real consequences:

- Implementation lags behind regulation

- Audits become more complex

- Risks evolve faster than oversight frameworks

- Compliance becomes procedural rather than meaningful

- Vendors interpret ambiguous requirements differently

- Patients and clinicians look for clearer accountability

This isn’t a failure—it’s the reality of rapid innovation. But it reinforces the need for shared responsibility:

- Governments set standards and accountability pathways

- Clinicians and health systems bring contextual judgment

- Vendors commit to transparency and responsible practices

- Technical communities support safe innovation

- Patients remain informed participants

No single institution can safeguard digital health alone.

When Technology Becomes a Partner in Governance

When used responsibly, emerging technologies can make governance scalable:

- Federated learning reduces privacy exposure by training models without moving raw data

- Permissioned blockchains can strengthen auditability and consent tracking

- Differential privacy and secure multiparty computation allow analytics with measurable limits on what is revealed about any individual

- Zero-trust architectures limit how far a breach can spread

- Fine-grained access control helps ensure data is accessed only when truly necessary

Technology cannot replace ethics, but it can reinforce ethical systems at scale.
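As one illustration of these techniques, differential privacy can be sketched with the Laplace mechanism applied to a simple count. Everything named here (`private_count`, the sample records, the choice of `epsilon`) is an assumption for illustration. The sketch uses plain floating-point sampling, which has known weaknesses, so a production system would rely on a vetted differential privacy library instead.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Return a noisy count of records matching the predicate.

    Adding or removing one person changes a count by at most 1,
    so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Made-up records: how many patients have a given condition?
records = [{"condition": "asthma"}] * 30 + [{"condition": "other"}] * 70
noisy = private_count(records, lambda r: r["condition"] == "asthma", epsilon=1.0)
```

A smaller `epsilon` adds more noise and gives stronger protection; choosing it is a governance decision as much as a technical one.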

A Governance Blueprint for the Global South

India stands at a unique crossroads, with a modern data protection law, a powerful digital public infrastructure in the Ayushman Bharat Digital Mission (ABDM), and a thriving health-tech ecosystem.

This moment offers a chance to shape a globally relevant governance model—one that:

- Embeds ethics and privacy from the start

- Strengthens digital literacy throughout the workforce

- Demands transparency from all digital health vendors

- Puts clinicians at the heart of decision-making

- Leverages public digital infrastructure as a foundation

- Shows how law and self-governance complement each other

The Global South need not simply follow global norms; it has the opportunity to set them.

The Real Measure: Trust

The value of a digital health system isn’t determined by the sophistication of its technology. It is measured by the trust it earns—and the trust it sustains.

Trust grows through:

- Ethical, human-centered design

- Clinical leadership

- Transparent governance

- Responsible innovation

- Widespread digital literacy

Regulation sets the boundaries. Values, judgment, and design choices determine whether the system within those boundaries is safe.

Healthcare may increasingly rely on data, but at its core, it remains profoundly human. The real challenge—and opportunity—is building governance that honours that truth.

A Call to Action Ahead of GLC4HSR Annual Conclave 2026

As we approach GLC4HSR Annual Conclave 2026, health data governance must move from a technical topic to a strategic one.

This requires:

- Policy makers developing sector-specific guidance

- Clinicians stepping into leadership roles in data stewardship

- Technologists designing for transparency and fairness

- Legal and ethical experts helping navigate inevitable trade-offs

- Patient groups grounding decisions in lived reality

- Health systems investing in governance infrastructure—not just new tools

The decisions we make now will determine whether digital health systems become engines of trust—or sources of risk.